In the rapidly evolving landscape of artificial intelligence (AI), ensuring its responsible and trustworthy use is a pressing concern. During a recent Responsible AI meeting, the Open Voice Trustmark gave a presentation to inform the community about its ongoing initiatives to foster trust and responsibility in AI technologies. Below is a recap of the session, covering the Trustmark’s mission, focus areas, principles, strategic pillars, and collaborative efforts.

Mission and Membership: Advocating for Responsible AI

Launched under the umbrella of the Open Voice Network in 2020, the Trustmark project transitioned to become an independent initiative of LF AI & Data in November 2023. Its mission is clear: to educate and advocate for the responsible and trustworthy use of technology. With a diverse membership comprising global representatives from various domains including security, technology, law, ethics, academia, entrepreneurship, and user advocacy, the Trustmark embodies a holistic approach to addressing the multifaceted challenges of AI.

Focus Areas: Ethical Imperatives

The Trustmark’s focus areas encompass ethical use, privacy and security, health and wellness, media and entertainment, and K-16 education. Recognizing that trustworthy AI has three key components (legality, ethics, and robustness), the Trustmark emphasizes compliance with applicable laws, adherence to ethical principles, and technical and social robustness to mitigate unintended harm. Trustworthy AI, as defined by the Trustmark, embodies characteristics such as validity, reliability, safety, security, accountability, transparency, explainability, interpretability, privacy enhancement, and fairness.

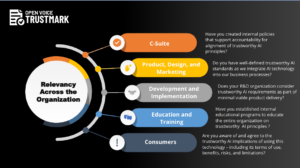

Principles and Stakeholder Engagement

Central to the Trustmark’s framework are six key principles: privacy, transparency, accountability, inclusivity, sustainability, and compliance. These principles help stakeholders across the AI lifecycle, from the C-suite to product design, development, education, training, and consumer engagement, ensure that AI solutions align with societal values, legal requirements, and best practices.

Strategic Pillars and Risk Mitigation

The Trustmark’s strategic pillars are endorsement, education, intention, and evidence. By building support for ethical principles, providing educational resources, offering self-assessment tools, and promoting best practices, the Trustmark aims to mitigate AI-related risks, including privacy breaches, security threats, performance issues, lack of control, economic ramifications, and societal implications. Drawing on an understanding of both technology and business risks, the Trustmark seeks to equip organizations and individuals with risk mitigation strategies tailored to their needs.

Industry Influence and Collaborative Endeavors

Collaboration is central to the Trustmark’s approach, spanning the public and private sectors, regulatory bodies, advocacy groups, and academic institutions. From participating in global initiatives like the OECD Global Challenge to Build Trust task force to partnering with organizations such as the Bill & Melinda Gates Foundation, The Linux Foundation, and Bridge2AI, the Trustmark draws on a diverse range of expertise to help shape the future of AI responsibly.

White Paper Publications and Future Endeavors

The Trustmark’s contributions extend beyond webinars, with a series of white paper publications covering ethical considerations, privacy principles, data security, healthcare innovations, synthetic voice technologies, and the future of media and entertainment. Furthermore, upcoming initiatives include training courses on generative AI ethics and risk mitigation strategies, along with the launch of a self-assessment and maturity model tool to aid organizations in assessing their trustworthy AI practices.

In conclusion, the Open Voice Trustmark webinar reflects a collective commitment to fostering trust, transparency, and responsibility in AI. Through ongoing education, advocacy, and collaboration, the Trustmark works to navigate the complex landscape of AI ethics and to ensure that technology serves humanity’s best interests.

For more insights, check out the Linux Foundation edX Training Course, explore our white paper publications, and stay tuned for upcoming initiatives aimed at advancing the responsible use of AI. Together, let’s shape a future where AI technologies empower and enrich lives while upholding ethical standards and societal values.

White paper publications:

- Ethical Considerations for Conversational AI

- Privacy Principles and Capabilities for Conversational AI

- Data Security for Conversational AI

- Advancing Health Care Innovations through Conversational AI

- Synthetic Voice for Content Owners and Creators

- The Future of Media and Entertainment Informed by Voice

Listen to the full webinar here.