Authors: Alessandro Pomponio, Srikumar Venugopal, Michael Johnston

Generative AI model development, including tasks such as training, fine-tuning, and inferencing, is often performed in cloud environments thanks to the ease of access to compute resources like GPUs. Here, the model weights — which can be very large in size — are usually stored using S3-compatible object storage services.

To access data in S3, the development team needs to be aware of configuration details, including the endpoint address, bucket name, and credentials. This can create a security risk as more people will have access to sensitive information. Additionally, this can impact collaboration if changes to the configuration are necessary. Changes must in fact be propagated to all team members to avoid disruptions, such as developers using the wrong bucket. This issue becomes more significant as the number of buckets used by the team increases.

Secret management solutions have effectively addressed the first challenge. The challenge of S3 configuration management across a team, however, remains difficult. We decided to address this problem in Kubernetes by creating Datashim, an open-source project that separates data access management from data consumption.

With Datashim:

- Data owners define Datasets, objects that link S3 configuration and credentials.

- Data users reference Datasets in their workloads. Datashim will automatically and transparently ensure the data is available.

This clear separation of data access from data consumption allows data owners to manage S3 access information while data users deploy workloads without needing those details. Datashim is an incubation project in the LF AI & Data Foundation.

A use case: model development on Kubernetes

To better understand how Datashim can help you, consider the common scenario of a model development team working with a staging and a production environment. On Kubernetes, this means having two namespaces, each featuring an inference server deployment. The staging inference server will use the latest available checkpoint and configuration while the production one will use a stable model and configuration to serve client requests. Hugging Face’s Text Generation Inference (TGI) service might be used for this purpose, reading weights from separate buckets, one for staging and one for production.

Without Datashim, Deployment specifications would have to include references to the S3 access credentials or volume mounts, with the latter method requiring the installation of a Container Storage Interface (CSI)-compatible S3 attacher, along with the creation and management of the related Persistent Volumes (PVs) and Persistent Volume Claims (PVCs).

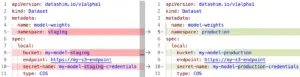

With Datashim, data owners can define two Datasets, one in the production and one in the staging namespace by applying the following YAMLs:

Differences between a “staging” and a “production” dataset — Full YAMLs available in the Datashim repo.

Datashim’s operator will automatically provision the corresponding PVs and PVCs using its built-in CSI-S3 attacher. Accessing the Dataset in the deployment then only requires referencing it via the spec.template.metadata.labels field of the deployment. There is no longer a need for references to volumeMounts, S3 endpoints, or credentials, and the same deployment YAML will be valid for both namespaces.

As a result, updates to the TGI Deployment made in the staging environment can be applied in the production space, safe in the knowledge that the production model weights will be used. The YAML is also simpler, avoiding the need for the Deployment owners to create, manage, and reference PVCs and PVs. Further, if the production or staging weights need to be moved, a simple redeployment is all that is needed.

Under the hood

Behind the scenes, the Dataset is a Kubernetes Custom Resource Definition (CRD) managed by the Datashim Operator. When a Dataset is created, the operator generates a Persistent Volume Claim and communicates with the appropriate CSI driver to provision the corresponding Persistent Volume. The operator will then manage the lifecycle of all these resources, so that the user does not have to.

The Dataset specification has been designed to be generic and provider-independent, allowing it to support various storage backends, including S3, NFS, and HostPath. We invested a significant amount of effort in ensuring a seamless experience with all these backends, especially S3. This required extending an open-source implementation of CSI-based volumes for S3 to support essential features such as on-demand bucket provisioning, a “ReadOnlyMany” volume access mode for S3 buckets, and the creation of volumes on bucket prefixes (or S3 “folders”) to isolate workloads to a subset of the data in a bucket.

We have also simplified the process for end users by hiding any complexity related to volumes using a MutatingWebhook. This webhook intercepts pod definitions and translates labels, such as dataset.0.id: model-weights, into volumes and volumeMounts in the resulting pod after ensuring that the dataset and corresponding PVCs are present in the namespace.

Roadmap

We have several features planned for inclusion in Datashim over the coming months. One of these features is adding support for more storage provider backends through the use of CSI APIs. For object storage, we plan on supporting IAM keys in addition to traditional credential pairs.

Try it yourself

Datashim v0.4 is out. Give it a try and share your feedback, and have a look at example files for this use-case.

- Datashim’s GitHub repository: https://github.com/datashim-io/datashim

- Try our walkthrough on using Datashim for serving AI models.

- Try the staging/production use case from this post.

- See how to configure A/B testing of Gen AI models using Datashim.