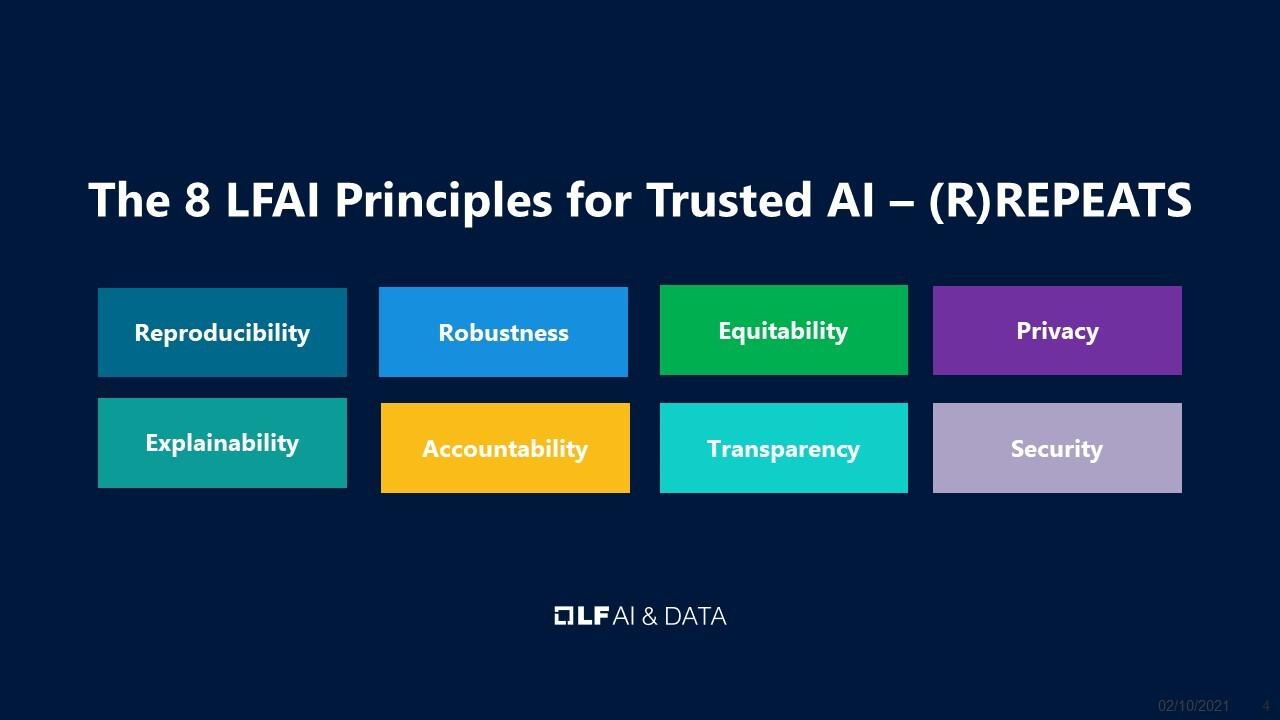

Principles for Trusted AI

The (R)REPEATS acronym captures the Trusted AI Principles: Reproducibility, Robustness, Equitability, Privacy, Explainability, Accountability, Transparency, and Security. The principles are of equal importance; the (R)REPEATS image presents them in the order of the acronym and in no other ranking. To learn more about the Principles for Trusted AI, see the blog post "LF AI & Data Announces Principles for Trusted AI".

Participants