By Ofer Hermoni and David Edelsohn

In December 2023, the authors of this blog, David Edelsohn and Ofer Hermoni, had an enriching opportunity to attend a thought-provoking session at the AI.Dev event, titled “Towards the Definition of Open Source AI,” moderated by the insightful Mer Joyce and Ruth Suehle. The authors and other AI and open-source professionals joined together to create an initial proposal for the definition of Open Source AI.

We will come back to this workshop soon, but first thing first we would like to explain what this blog post is all about. We start with the importance of Open Source AI, then explain the complexity of the definition of Open Source AI, and then share our own (current) thoughts about the problem. We conclude by inviting you to join the effort!

Open Source AI is critical to the future direction of and broad availability of AI for several reasons. Firstly, it democratizes access to advanced technology, allowing a broader community, including researchers, developers, and businesses, to innovate and contribute. This inclusive approach accelerates technological advancements and fosters a collaborative ecosystem.

Secondly, open-sourcing AI models promote transparency and accountability. By having open access to the algorithms, software, and models, stakeholders can scrutinize and understand the decision-making processes of AI, which is crucial for ethical considerations and bias mitigation.

Furthermore, open-source AI nurtures an educational environment, offering learning opportunities to students and professionals alike, and enhancing skill development in this critical field.

Additionally, open-sourcing AI aligns with a broader movement towards shared knowledge and collective progress in technology, embodying the spirit of the Open Source Initiative and reinforcing the ethos of community-driven innovation. This approach not only fuels technological advancement but also ensures that these advancements are accessible and beneficial to a wide range of users, fostering a more equitable tech landscape.

AI, especially Generative AI, has exploded in the past year through rapid technological development and innovative deployment. AI is expanding in all fields, from entertainment to drug and material discovery to weather forecasting. AI models are being developed for all forms of media – writing, images, video, and music. An AI Application consists of more components than a traditional software application, and the definition of Open Source needs to adapt and expand appropriately. Amidst all of this change, the process for AI training and inference remains consistent.

We believe that the key insight is determining which components of the AI Application and its construction process need to be open to fulfill the original intent of Open Source. A sufficiently general definition of Open Source AI has a good chance to stand the test of time as AI continues its rapid transformation.

Coming back to the workshop… The core mission of this workshop was monumental – to carve out a clear and universally acceptable definition of Open Source AI. This session was part of a wider initiative by the OSI (Open-Source Initiative) organization to define Open Source AI by October 2024 (you can find the latest draft here).

Our team, which included some of the brightest minds in the domain, could not come up with an easy and clear definition. Unlike Open Source software, where guidelines are well-established by Open Source Initiative (OSI), AI introduces layers of complexity that challenge conventional frameworks.

To understand the complexity of this task, we need to break down the four freedoms dictated by the conventional open-source software definition, and the complexity of AI. For that, we will take an example of AI models, which are complex artifacts with multiple pipelines.

The four freedoms:

- Use the system for any purpose and without having to ask for permission.

- Study how the system works and inspect its components.

- Modify the system for any purpose, including to change its output.

- Share the system for others to use, with or without modifications, for any purpose.

Open Source Software does not define different requirements for the different freedoms, and neither should Open Source AI. Our team adopted a solution that mapped the pragmatic requirements of Open Source Software to Open Source AI: The AI Framework and the Trained AI Model are Open Source. A recipient of the AI project must have the ability to fine-tune, align, and prompt-engineer the model, but one does not necessarily have the ability to fully recreate the original model.

Open Source AI does not mean Open Science. It is not the availability of and access to every artifact, component, or input of the AI project and environment. Carrying the mantle of Open Source Software into the AI domain, users must be able to achieve the four freedoms: study, use, modify, and share the AI project. Open Source Software does not require that the project and community hand-hold the recipients of the software and provide all of its dependencies. Open Source AI can establish a similar, pragmatic balance.

Our team believes that just as the four Open Source freedoms map consistently to the various components of software, similarly the four Open Source freedoms map consistently to the components of Artificial Intelligence.

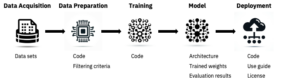

To understand the complexity of defining AI Open Source, we will explore at a high level the process of training and deploying a machine learning model. The process encompasses a series of interconnected steps, each pivotal to the model’s eventual performance and utility.

- Data Acquisition

The initial phase, Data Acquisition, involves identifying and gathering relevant datasets that will form the foundation of training. This step is crucial as it determines the quality and scope of information the model will learn from. It includes collecting data from various sources and ensuring the data is representative of the problem space to address real-world scenarios effectively. - Data Preparation

Following acquisition, the Data Preparation phase is essential for transforming raw data into a format suitable for machine learning. This includes cleaning data to remove inaccuracies or irrelevant information, normalizing or standardizing data to ensure consistency, and performing feature engineering to enhance model learning. - Model Development (Training)

Model Development or training involves selecting an appropriate machine learning algorithm and using the prepared data to train the model. This step is where the algorithm learns from the data by adjusting its parameters to minimize errors, a process that is iterative and requires careful monitoring to ensure optimal learning. - Model Evaluation and Tuning

After training, the Model Evaluation and Tuning phase assesses the trained model’s performance using unseen data. This crucial step verifies the model’s ability to generalize and perform reliably in practical scenarios. It often involves fine-tuning the model by adjusting its parameters or training approach based on performance metrics gathered from the validation and test datasets. The objective is to refine the model to achieve the best possible accuracy and efficiency before deployment. - Deployment and Documentation

The final phase, Deployment and Documentation, transitions the model from a development setting to a real-world application. This involves integrating the model into a production environment where it can process new data and provide insights or predictions. A key component of this phase is the creation of comprehensive documentation and a user guide, which facilitate the model’s use, ensuring users understand how to interact with the model effectively, interpret its outputs, and address potential issues.

| Study | Use | Modify | Share | |

| Code | Meets OSD | Meets OSD | Meets OSD | Meets OSD |

| Training Code | Don’t Care | Don’t Care | Don’t Care | Don’t Care |

| Model | Meets OSD | Meets OSD | Meets OSD | Meets OSD |

| Training Data | Don’t Care | Don’t Care | Don’t Care | Don’t Care |

| Data | Not Applicable | Not Applicable | Not Applicable | Not Applicable |

*OSD = Open Source Definition

The five components:

- Code

The source code of the AI model framework; the infrastructure in which the AI model is expressed. This component is exactly equivalent to Open Source software. - Training Code

The scripts, tools, and procedures to prepare the training data and then orchestrate the training process. This component is equivalent to the environment in which a particular Open Source software package is built or run, on which Open Source software definitions don’t impose requirements. - Model

The AI model algorithm, architecture, weights and biases, parameters, and hyperparameters, in a format that can be loaded into the AI Framework for which it was designed. This component must use a license that allows users and developers the ability to apply the four freedoms of Open Source software to the model. - Training Data

The various processed iterations of data used to train the AI model, including, the raw data corpus, ingested data, pre-processed data, filtered (de-duplicated and cleaned) data, data labeling, and reputation ranking of data. This includes training data, validation data, and test data. This component is material appropriate for Open Science, to completely reproduce the model and all artifacts. For example, Open Source software may use an entire Linux distribution for coverage testing or to choose parameters within the software, but the package is not required to provide an entire Linux distribution to be validated as Open Source. - Data

Data used for fine tuning, aligning, knowledge graphs, retrieval data, task specific data, and prompting engineering to elicit output from the model. This component is equivalent to the user input to an Open Source package, which is outside the definition of Open Source software.

Open Science would require all input and training data, including all tools and procedures, to create the model. Open Source AI provides the basic components and the results, with the ability to study, modify, share, and use the model, but without the requirement to completely reproduce the model. As with Open Source Software, users of Open Source AI have the ability to examine the model design, run the model, examine its output and behavior, and modify the model through fine-tuning and prompt engineering. The user could gather public data and tools to train a similar model. Open Source is consistent with batteries not included.

Our esteemed colleagues in the LFAI&Data Generative AI Commons have developed a wonderful framework enumerating model openness through 15 components spanning the entire model development and deployment process, including training data, model architecture, model parameters, evaluation benchmarks, and documentation among others. Different audiences and user communities require different levels of openness or specific characteristics of openness, with greater or lesser accessibility than Open Source AI or Open Science. Generative AI Commons is a wonderful forum to continue this broader conversation and we encourage participation to explore these topics.

Open Science or Open Source Hardware is a wonderful ideal, but not required for Open Source Software or Open Source AI to be effective, productive, useful, and spur innovation, experimentation, adaptation, and education.

In conclusion, we believe that there is a fine line that we, as a community, need to walk as we balance the requirements and expectations of the Open Source community and the AI community. As with Open Source Software, Open Source AI models must balance developer requirements, business requirements, and technical requirements. Artificial Intelligence models are a new territory and the communities need to develop a consensus to successfully adapt Open Source terminology to AI models to ensure that the field continues to thrive.

We invite you to connect with us and contribute to the definition of Open Source AI.

David Edelsohn – https://www.linkedin.com/in/davidedelsohn/

Ofer Hermoni – https://www.linkedin.com/in/ofer-hermoni/