Machine learning systems have transformed many industries by enabling automation, prediction, and data-driven decision-making. However, as these systems become more widespread and more critical to organizations, securing them becomes paramount. In a recent NeurIPS workshop keynote, Alejandro Saucedo, founding chair of the ML Security Committee at the LF AI & Data Foundation, outlined the security challenges of production machine learning systems and the best practices for addressing them. This blog post summarizes the key insights from the keynote and makes the case for building security into every stage of the machine learning lifecycle.

- Security Challenges in ML Systems

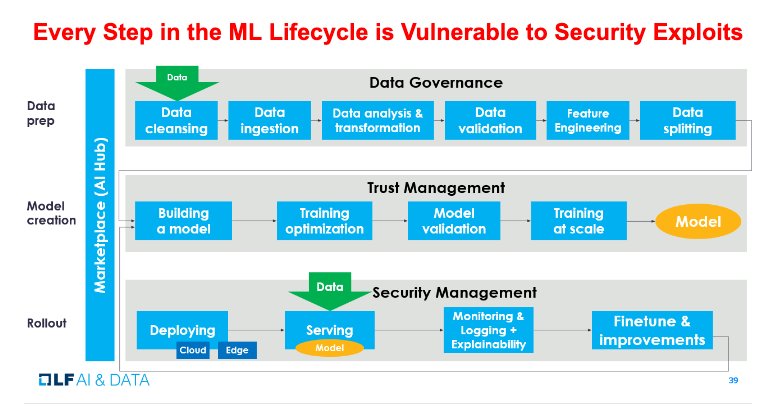

Machine learning systems introduce unique security challenges due to their complexity, dependencies, and data flows. Unlike traditional software, an ML system consists not just of code but also of model artifacts, inputs, outputs, training data, configurations, and environments. Operationalizing ML models adds another layer of complexity, because models are deployed across different environments and may go through many iterations. These characteristics call for specialized security practices and resources.

During the keynote, Alejandro discussed specific vulnerabilities found in ML systems. One involves pickle files, a common format for serialized model artifacts in the Python ecosystem. Because unpickling can invoke arbitrary Python callables, loading an untrusted pickle file can execute arbitrary code and lead to a security breach. Efforts are under way to introduce artifact signing and scanning tools that help establish whether an artifact can be trusted.
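To make the pickle risk concrete, here is a minimal sketch of the kind of check an artifact scanner can perform, using only the standard library. `pickletools.genops` decodes the opcode stream without executing it, and the function flags every imported global that is not on an allowlist. The allowlist, the `scan_pickle` name, and the demo `Malicious` class are hypothetical, and the `STACK_GLOBAL` handling is a heuristic (it assumes the two most recently pushed strings are the module and name), so treat this as an illustration rather than a substitute for a maintained scanning tool.

```python
import pickle
import pickletools

# Hypothetical allowlist of (module, name) pairs we are willing to import
# while unpickling. Real scanners maintain far more careful lists.
SAFE_GLOBALS = {
    ("builtins", "dict"),
    ("builtins", "list"),
    ("collections", "OrderedDict"),
}

# Opcodes that push a string which a later STACK_GLOBAL may consume.
STRING_OPCODES = {"SHORT_BINUNICODE", "BINUNICODE", "BINUNICODE8", "UNICODE"}


def scan_pickle(data: bytes) -> list[str]:
    """List the globals a pickle would import that are not allowlisted.

    pickletools.genops only decodes the opcode stream; nothing is executed,
    so it is safe to run on an untrusted file.
    """
    findings = []
    strings = []  # recently pushed strings (heuristic for STACK_GLOBAL)
    for opcode, arg, _pos in pickletools.genops(data):
        if opcode.name in STRING_OPCODES:
            strings.append(arg)
            continue
        if opcode.name == "GLOBAL":
            # Protocols 0-2: the argument is "module name".
            module, name = arg.split(" ", 1)
        elif opcode.name == "STACK_GLOBAL":
            # Protocols 4+: module and name were pushed as strings beforehand.
            if len(strings) < 2:
                findings.append("<unresolved STACK_GLOBAL>")
                continue
            module, name = strings[-2], strings[-1]
        else:
            continue
        if (module, name) not in SAFE_GLOBALS:
            findings.append(f"{module}.{name}")
    return findings


class Malicious:
    """Demo payload: unpickling it calls print(); it could call anything."""

    def __reduce__(self):
        return (print, ("this ran during unpickling!",))


benign = pickle.dumps({"weights": [0.1, 0.2]})
payload = pickle.dumps(Malicious())
print(scan_pickle(benign))   # []
print(scan_pickle(payload))  # ['builtins.print']
```

Note that the sketch never calls `pickle.loads` on the payload: the point of scanning is to inspect a file without running it.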

Adversarial attacks were also highlighted as a significant concern. These attacks craft input examples that look normal to humans but cause ML models to make incorrect predictions. Detecting and mitigating such adversarial instances requires careful implementation and ongoing monitoring.

Another critical aspect of securing ML systems is dependency management. Python resolves dependencies dynamically at install time, so unpinned dependencies can silently pull in different, and potentially vulnerable or compromised, package versions. Projects like MLServer aim to address this issue and effectively manage these dynamic dependency supply chain vulnerabilities.
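One lightweight guard against the unpinned-dependency problem is to fail a build when a requirements file contains anything other than exact `==` pins. The sketch below assumes a pip-style `requirements.txt`; the `find_unpinned` helper and its regular expression are hypothetical and intentionally conservative (for example, it treats `===` pins and VCS URLs as unpinned). It is not how MLServer handles dependencies, and it does not look up known vulnerabilities, which is the job of dedicated scanners.

```python
import re

# Hypothetical pattern for an exact pin such as "numpy==1.26.4".
PINNED = re.compile(
    r"^[A-Za-z0-9][A-Za-z0-9._-]*"  # project name
    r"(?:\[[^\]]*\])?"               # optional extras, e.g. requests[security]
    r"\s*==\s*[^=\s;*]+"             # exact '==' pin, no wildcards
    r"(?:[\s;].*)?$"                 # optional marker or trailing options
)


def find_unpinned(requirements_text: str) -> list[str]:
    """Return requirement lines that do not pin an exact version with '=='."""
    unpinned = []
    for raw in requirements_text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments and whitespace
        if not line or line.startswith("-"):
            continue  # skip blanks and pip options such as -r or --index-url
        if not PINNED.match(line):
            unpinned.append(line)
    return unpinned


reqs = """
numpy==1.26.4
scikit-learn>=1.3   # floating lower bound
requests[security] == 2.31.0
pandas
torch==2.*
-r other-requirements.txt
"""
print(find_unpinned(reqs))  # ['scikit-learn>=1.3', 'pandas', 'torch==2.*']
```

Pinning alone does not make a dependency safe; it makes the installed set reproducible, so that a vulnerability scan of the pinned versions actually describes what runs in production.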

- Best Practices and Resources

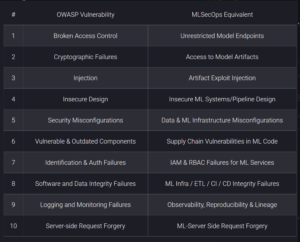

To address the security challenges in ML systems, it is essential to adopt best practices similar to those used in general software engineering. While building a completely unhackable system may be impossible, a reasonable level of security can be achieved through best practices, tooling, and automation. One valuable resource Alejandro highlighted is the OWASP Top 10, whose security principles also apply to ML systems. The MLSecOps Top 10, in turn, focuses on vulnerabilities specific to ML systems, helping practitioners prioritize the most effective security practices.

Source: https://ethical.institute/security.html

To mitigate security risks, Alejandro recommended several best practices and tools: signing artifacts, implementing adversarial detectors, conducting thorough code and artifact reviews, and adopting secure deployment practices. Dependency management can be improved with tools like safety and piprot, which check Python dependencies for known vulnerabilities and outdated versions, respectively. Security scans of ML artifacts and containers with tools like Trivy provide additional assurance. Finally, collaboration and communication between the different teams involved in ML systems, such as machine learning engineers, DevOps engineers, and DataOps engineers, are crucial for addressing security challenges effectively.
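Artifact signing boils down to recording a cryptographic tag when an artifact is produced and refusing to load it if the tag no longer matches. The sketch below uses a shared-secret HMAC-SHA256 from Python's standard library as a simplified stand-in; production signing schemes typically use asymmetric signatures (for example, tooling from the Sigstore project) so that consumers can verify artifacts without holding the signing key. The function names, the random key, and the file name are assumptions for illustration.

```python
import hashlib
import hmac
import os
import tempfile


def sign_artifact(path: str, key: bytes) -> str:
    """Return a hex HMAC-SHA256 tag over the artifact's bytes.

    Simplified stand-in for real signing: anyone with the key can both
    sign and verify.
    """
    mac = hmac.new(key, digestmod=hashlib.sha256)
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(65536), b""):  # stream large files
            mac.update(chunk)
    return mac.hexdigest()


def verify_artifact(path: str, key: bytes, expected_tag: str) -> bool:
    """Check the artifact against a previously recorded tag."""
    # compare_digest runs in constant time, avoiding timing side channels.
    return hmac.compare_digest(sign_artifact(path, key), expected_tag)


key = os.urandom(32)  # hypothetical: in practice, load from a secrets manager
with tempfile.TemporaryDirectory() as tmp:
    model_path = os.path.join(tmp, "model.bin")  # hypothetical artifact
    with open(model_path, "wb") as f:
        f.write(b"trained model weights")
    tag = sign_artifact(model_path, key)          # at training/publish time
    print(verify_artifact(model_path, key, tag))  # True

    with open(model_path, "ab") as f:             # simulate tampering
        f.write(b" + injected payload")
    print(verify_artifact(model_path, key, tag))  # False
```

Verification belongs right before deserialization: a serving component that checks the tag first and only then loads the model never executes a tampered artifact.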

- Conclusion

Securing machine learning systems requires a holistic approach that goes beyond tooling alone. It also involves infrastructure security, access control, encryption, and pipeline hardening. By implementing security measures at each stage of the machine learning lifecycle and following best practices, organizations can establish a foundation of trust and mitigate potential risks. Keeping up with the latest developments in machine learning security, for example through the work of the LF AI & Data ML Security Committee, is essential to sustaining that trust over time.

Additional Resources:

- Alejandro Saucedo’s NeurIPS workshop keynote video

- MLSecOps Top 10

- Production Machine Learning Monitoring: Principles, Patterns, and Techniques