“The Linux Foundation is proud to champion a vision where openness drives progress, fostering an ecosystem that enables collaboration, innovation, and responsible adoption of transformative technologies like generative AI (GenAI). Initiatives such as the Generative AI Commons bring organizations together under a neutral umbrella to collaborate and fuel open source innovation. Our Model Openness Framework and companion tools empower model creators and users with practical, transparent guidance for building and adopting open AI systems.” By Ibrahim Haddad, PhD, Executive Director of LF AI & Data

Security remains a cornerstone of this transformation. As organizations embrace GenAI, safeguarding sensitive data and ensuring compliance with industry standards have become critical imperatives.

The Open Source Security Foundation (OpenSSF), with its focus on securing open source software, plays a pivotal role in establishing best practices for developing secure AI systems. In 2024, the OpenSSF AI/ML Working Group launched a project focused on model signing. The project is developing a proof of concept for signing machine learning models with Sigstore, with the aim of strengthening trust in and the security of those models. A stable release is expected soon. The working group has also started collaborating with other AI security-focused groups to ensure effective information sharing across the community and with stakeholders. These initiatives exemplify the AI/ML Working Group’s broader efforts to address the security risks associated with AI systems. By providing guidance, tooling, and techniques, the group aims to help open source projects and their adopters securely integrate and use AI technologies, as well as detect and defend against them.

The Shaping the Future of Generative AI report provides valuable insights into how organizations prioritize security and balance it with performance, cost, and privacy in their GenAI adoption journey.

Survey Highlights: Security as a Driver

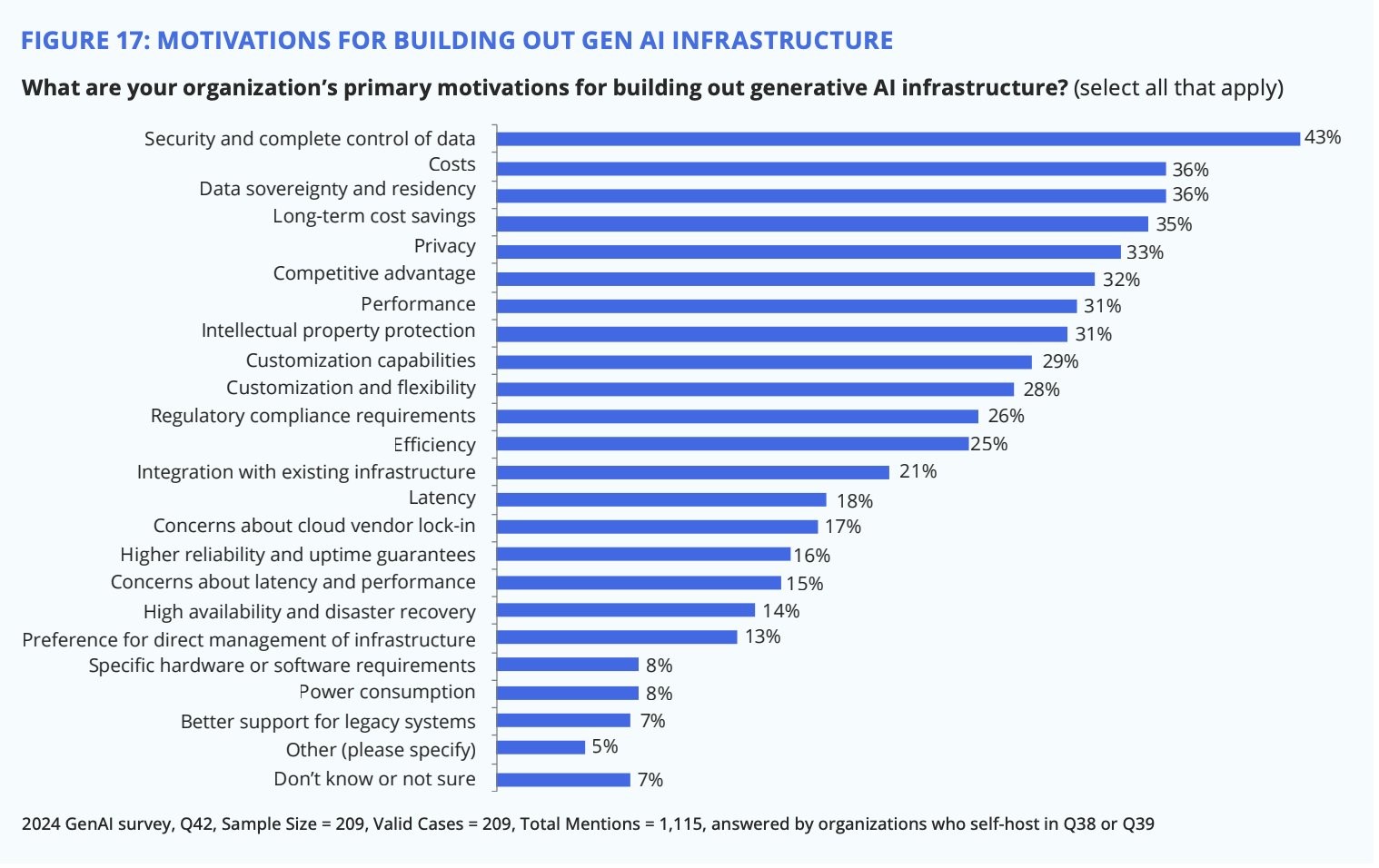

Organizations building their own GenAI infrastructure most often cite security and data control (43%) as their motivation, followed by cost (36%), data sovereignty (36%), and long-term savings (35%). Self-hosted and dedicated infrastructure provides a controlled environment, reducing the risk of breaches and allowing for tailored security measures.

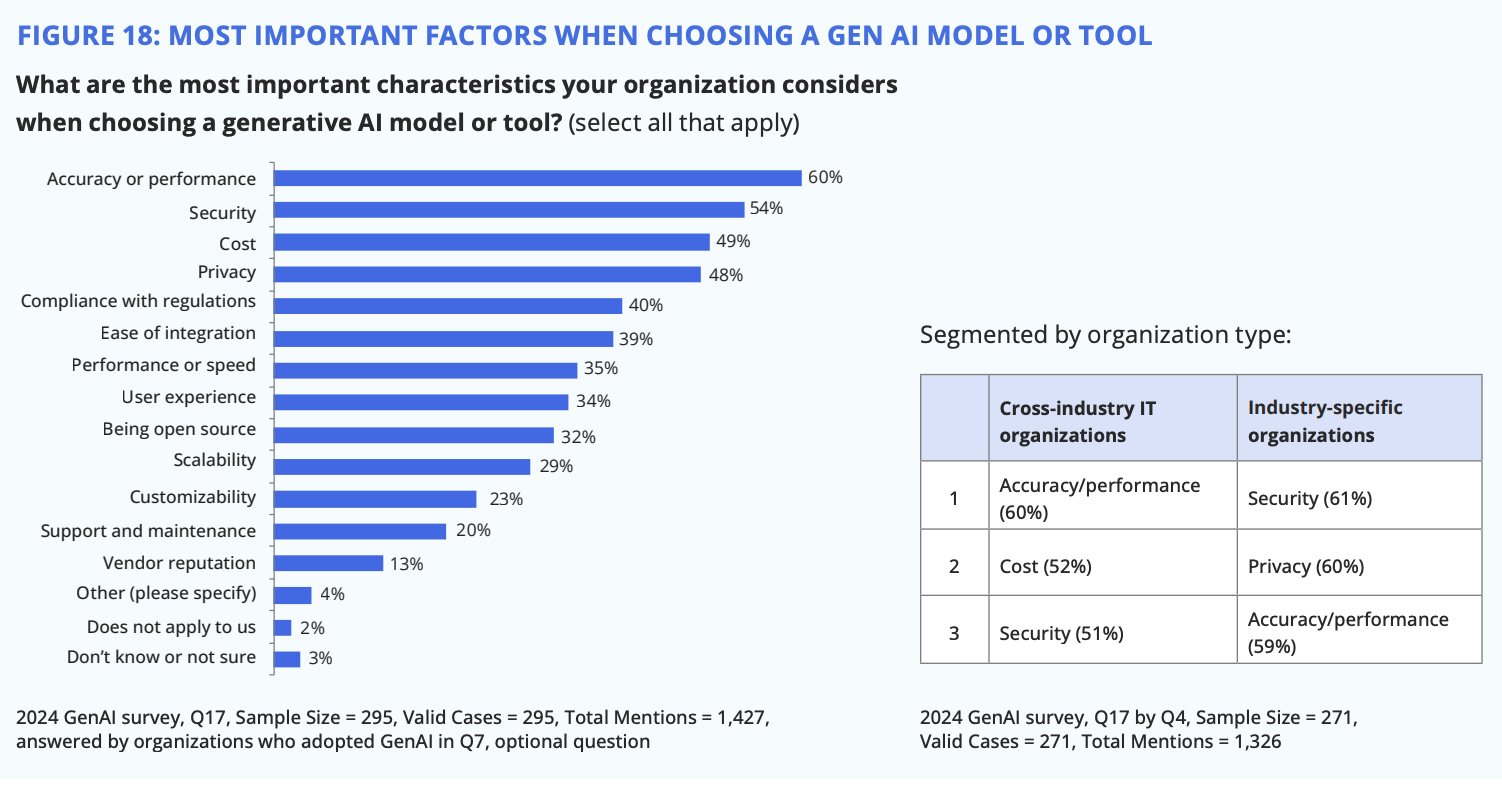

When selecting GenAI tools, accuracy and performance lead as key priorities (60%), with security (54%), cost (49%), and privacy (48%) following closely. These preferences highlight the need for solutions that are not only effective but also secure and cost-efficient.

Industry-Specific Insights

The report reveals variations in security priorities across sectors:

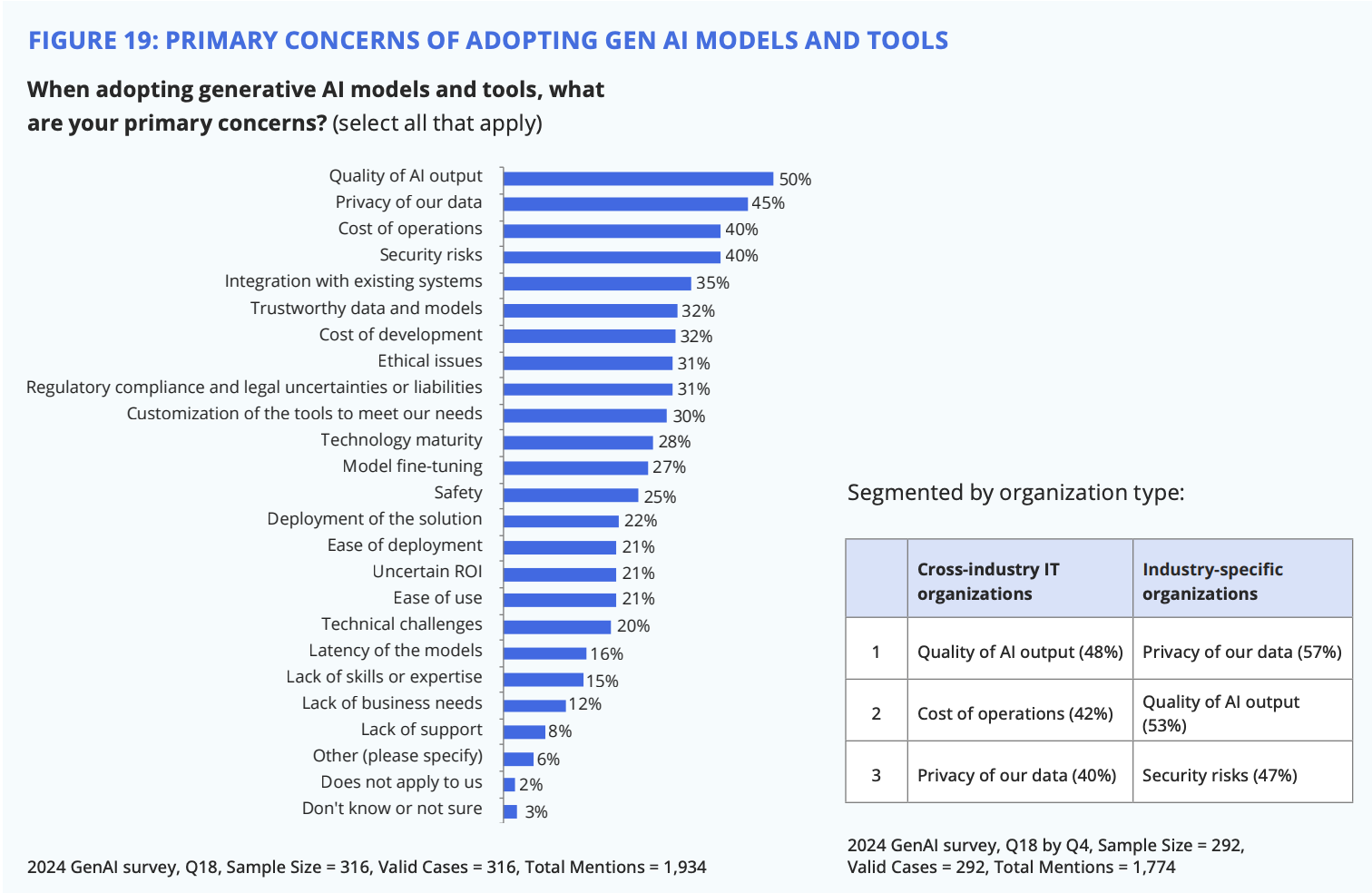

- Due to stringent regulatory demands, finance and healthcare organizations emphasize security (61%) and privacy (60%).

- Cross-industry IT organizations prioritize accuracy and performance (60%), focusing on efficiency and ROI.

These differences reflect the diverse challenges organizations face across industries as they navigate GenAI adoption.

Overcoming GenAI Challenges

Key challenges include output quality, privacy, operational costs, and security risks. Addressing these concerns is essential for leveraging GenAI’s transformative potential while maintaining trust and compliance in sensitive domains.

We encourage you to read the full Shaping the Future of Generative AI report to explore these findings in more depth and find actionable insights for your organization. We can build a future where openness, innovation, and security intersect to unlock new possibilities.