The Open Voice Trustmark Initiative, a Linux Foundation AI & Data project, has recently made significant strides in developing an innovative AI framework to support individuals with mental health issues. This framework, designed in collaboration with Diego Gosmar, Oita Coleman of the Open Voice Trustmark, and Elena Peretto from Fundació Ajuda i Esperança, provides essential tools for psychologists.

The work is described in the paper “Insight AI Risk Detection Model: Vulnerable People Emotional Situation Support,” authored by Diego Gosmar, Elena Peretto, and Oita Coleman. The paper was presented at the 28th International Conference on Evaluation and Assessment in Software Engineering (EASE 2024), held in Salerno, Italy, and published by ACM, New York, NY, USA.

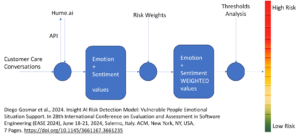

The paper introduces an AI-based risk detection model that provides real-time emotional support and risk assessment, responding in particular to the rise in mental health issues among youth. The model uses Insight AI for sentiment and emotion analysis to evaluate synthetic interactions modeled on Fundació Ajuda i Esperança's customer service for vulnerable young people aged 14 to 25. That service has handled more than 7,000 chat interactions on issues such as depression, anxiety, and relationship problems. The paper stresses the importance of ethical AI use in mental health services and advocates responsible deployment by non-profit organizations such as Fundació Ajuda i Esperança.

The study underscores the growing prevalence of mental health issues worldwide, with approximately 20% of youth affected. The critical shortage of mental health professionals—sometimes as few as one per 10,000 individuals—highlights the urgent need for scalable, immediate emotional support. This gap makes Digital Mental Health Interventions (DMHIs), which provide diagnostics, symptom management, and content delivery, increasingly essential.

The Insight AI Risk Detection Model is a key innovation in this field, developed in collaboration with Fundació Ajuda i Esperança. It is specifically designed to address the mental health needs of 14- to 25-year-olds, a group that has seen a 25% rise in reported mental health issues over the past decade. The model uses AI to analyze conversation patterns and offer timely support while navigating important ethical and legal considerations such as informed consent and privacy protection.
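The article does not say how per-message analysis results become a risk assessment. As a rough illustration only, the following sketch assumes an analyzer that returns, for each message, a sentiment score in [-1, 1] and a dominant emotion label, and combines the two signals into a conversation-level risk flag. The `MessageScore` type, the `conversation_risk` function, the set of risk emotions, and both thresholds are hypothetical choices made for this example. They are not the Insight AI API or the method described in the paper.

```python
from dataclasses import dataclass
from statistics import mean

# Hypothetical set of emotion labels treated as risk signals (an assumption,
# not the paper's taxonomy).
RISK_EMOTIONS = {"sadness", "fear", "hopelessness"}


@dataclass
class MessageScore:
    """Per-message output of an assumed sentiment/emotion analyzer."""
    sentiment: float  # assumed range: -1.0 (very negative) to 1.0 (very positive)
    emotion: str      # dominant emotion label for the message


def conversation_risk(scores: list[MessageScore],
                      sentiment_threshold: float = -0.5,
                      emotion_share_threshold: float = 0.5) -> str:
    """Combine sentiment and emotion signals into 'low', 'medium', or 'high'.

    Both thresholds are illustrative defaults, not tuned values.
    """
    if not scores:
        return "low"
    # Signal 1: average sentiment across the conversation.
    avg_sentiment = mean(s.sentiment for s in scores)
    # Signal 2: share of messages whose dominant emotion is a risk emotion.
    risk_share = sum(s.emotion in RISK_EMOTIONS for s in scores) / len(scores)
    negative = avg_sentiment <= sentiment_threshold
    distressed = risk_share >= emotion_share_threshold
    if negative and distressed:
        return "high"
    if negative or distressed:
        return "medium"
    return "low"


# Usage: two of three messages are strongly negative and sad or fearful.
chat = [
    MessageScore(-0.8, "sadness"),
    MessageScore(-0.7, "fear"),
    MessageScore(-0.3, "neutral"),
]
print(conversation_risk(chat))  # high
```

Requiring both signals for a `high` flag reflects the article's point that sentiment and emotion analysis are used together. A flag like this would plausibly serve to prioritize conversations for review by a mental health professional rather than replace their judgment.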

The framework is designed for future enhancement and broader application through AI fine-tuning. Planned work includes improving synthetic conversation generation, customizing the model through AI model APIs, and optimizing it for specific populations using Sequential Quadratic Programming (SQP) techniques. Integrating sentiment and emotion analysis has produced strong results for sentiment analysis, although the emotion analysis still needs refinement.

Deploying such technology, particularly in sensitive areas like mental health support, requires a careful and ethically responsible approach. The model’s development is guided by a strong commitment to ethical principles, including model privacy, data security, and informed consent, which are crucial for its acceptance and scalability.

Two major challenges are addressed:

- The highly sensitive nature of these conversations, which raises privacy concerns.

- The cryptic communication styles often found in interactions between young people and mental health professionals.

As the model evolves, its real-world application will require ongoing improvement based on feedback from service interactions and continuous collaboration with mental health experts. While the initial results are promising, they represent just the beginning of a broader dialogue and further research into the responsible use of AI in emotional and mental health support.

Readers interested in the details can find the full paper through ACM; the citation is:

Diego Gosmar, Elena Peretto, and Oita Coleman. 2024. Insight AI Risk Detection Model: Vulnerable People Emotional Situation Support. In 28th International Conference on Evaluation and Assessment in Software Engineering (EASE 2024), June 18–21, 2024, Salerno, Italy. ACM, New York, NY, USA.