The rapid growth of AI presents great potential but also introduces uncertainties and risks, such as bias, safety concerns, and potential misuse. LF AI & Data acknowledges the importance of a responsible approach to AI development and deployment. To address these challenges, we are excited to share the Responsible AI Pathways initiative, which promotes open collaboration and guides the responsible development and deployment of AI technologies.

Responsible AI Pathways: Growing from the Kernel of the Framework

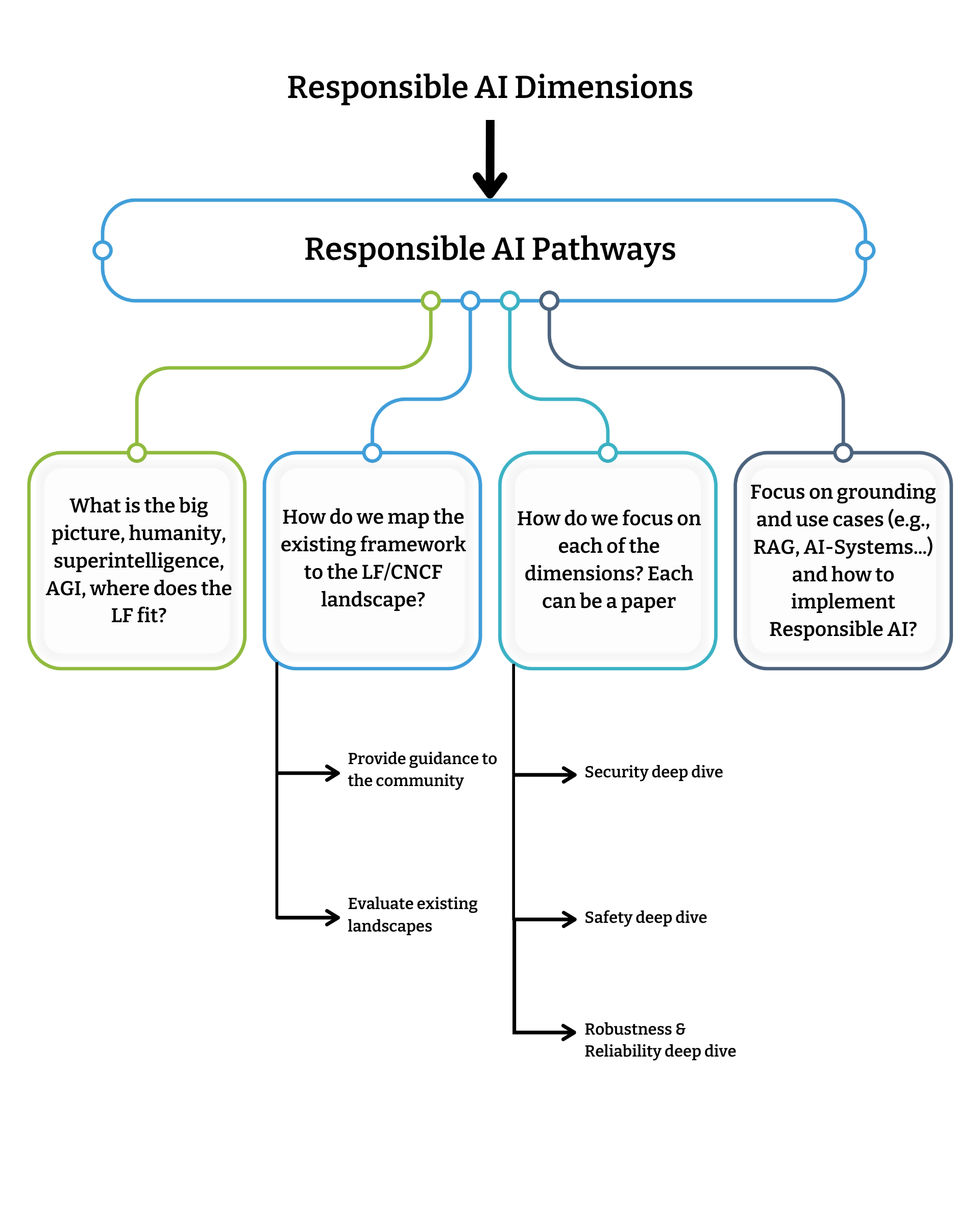

The Responsible AI Pathways Initiative builds upon the core principles and guidelines established in the Responsible AI Framework (to be detailed in a forthcoming publication). This framework lays the foundation for responsible AI and defines a taxonomy for identifying and addressing potential challenges across nine key dimensions:

- Human-centered & Aligned: Ensuring AI systems prioritize human well-being and align with societal values.

- Accessible & Inclusive: Making AI accessible to everyone and promoting equitable outcomes.

- Robust, Reliable & Safe: Building AI systems that are dependable, secure, and perform consistently.

- Transparent & Explainable: Demystifying AI operations and providing clear explanations for decisions.

- Observable & Accountable: Making AI behavior observable and establishing clear lines of responsibility for AI actions and outcomes.

- Private & Secure: Protecting user data and ensuring confidentiality.

- Compliant & Controllable: Adhering to legal and ethical standards and maintaining human oversight.

- Ethical & Fair: Promoting fairness, mitigating bias, and ensuring equitable treatment.

- Environmentally Sustainable: Minimizing the environmental impact of AI development and deployment.

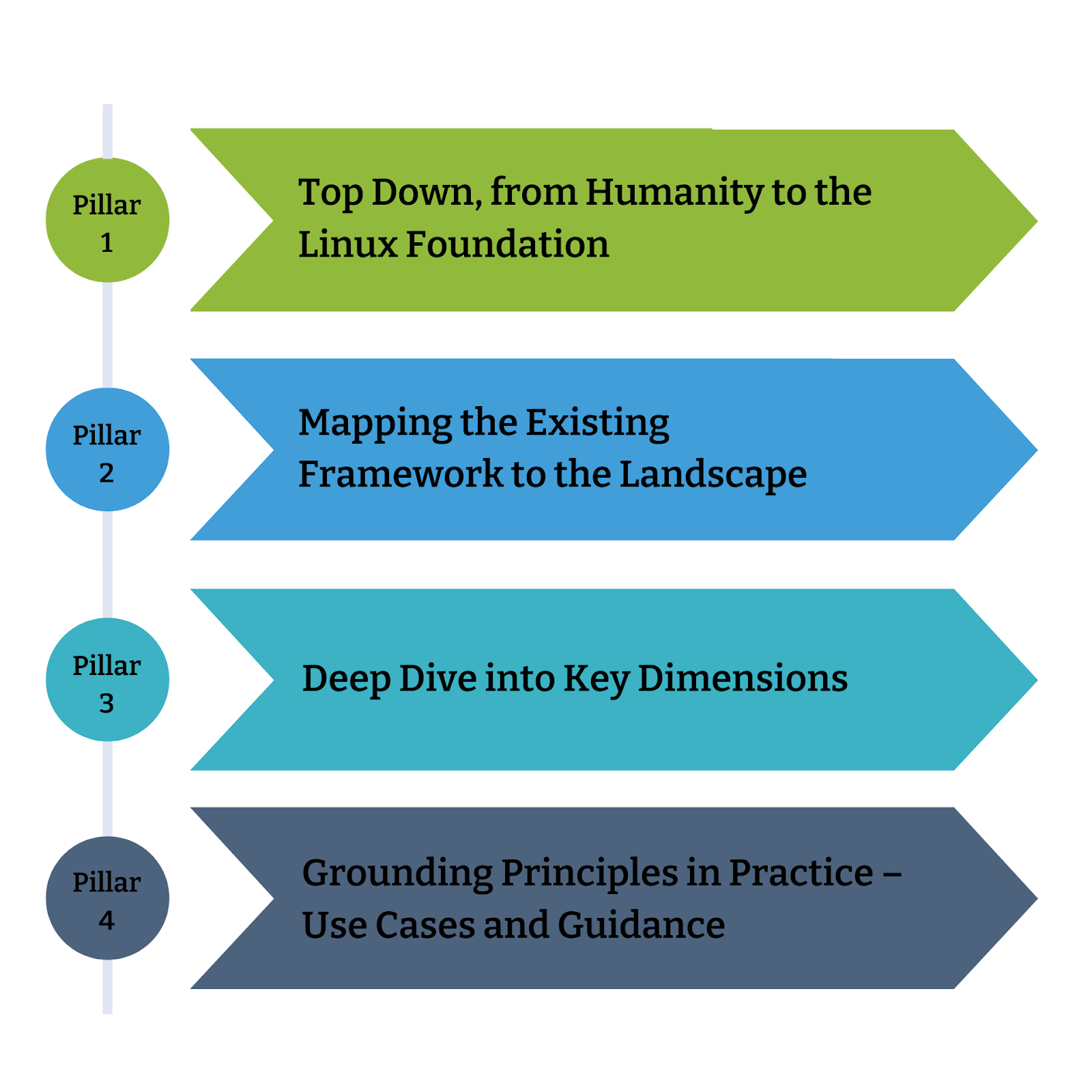

As the following sections describe, the Pathways initiative expands upon this foundation by translating these principles into concrete actions and strategies, organized into four core pillars.

Pillar 1: Top Down, from Humanity to LF AI & Data

The fast progress of AI demands that we consider the big picture before moving the pieces. How do LF AI & Data’s efforts contribute to humanity’s future alongside increasingly intelligent machines? This involves grappling with questions like:

- How can humanity adapt to and thrive with non-human (super) intelligence? This requires exploring the evolving relationship between humans and AI, considering ethical implications and societal impact.

- How do the LF’s existing AI and data initiatives fit into this broader context? We must ensure alignment between our current efforts and the long-term goals of responsible AI development.

Key concepts underpinning this exploration include:

- Intelligence vs. Consciousness: Defining the distinctions and interplay between these concepts is crucial for understanding the ethical implications of advanced AI.

- Post-Classical AI Paradigms: Exploring emerging paradigms like neuromorphic, quantum, and biological computing, and their implications for Responsible AI.

- Open Science and Open Source AI: Defining and promoting open practices for AI research and development to foster transparency and collaboration.

- Game Theory for Survival: Applying game theory principles to model human-AI interactions and develop strategies for cooperation and conflict resolution.

- Responsible AI Gap Analysis: Identifying current gaps in Responsible AI research and practice, focusing on both near-term challenges (Generative AI, LLMs) and long-term considerations (Superintelligence, Omnipresent Intelligence).

Pillar 2: Mapping the Existing Framework to the Landscape

This pillar focuses on taking stock of the existing AI landscape, both within the LF and externally. This involves:

- Analyzing Existing LF/CNCF Projects: Classifying projects according to the nine Responsible AI dimensions.

- Mapping the Broader Responsible AI Ecosystem: Understanding the work being done by other organizations, researchers, and initiatives to identify best practices, potential collaborations, and areas where the LF can make unique contributions.

- Identifying Gaps and Overlaps: Pinpointing areas where more focus is needed and ensuring that efforts within the LF are complementary and avoid duplication.

- Creating a Responsible AI Landscape: Identifying projects already contributing to Responsible AI, exploring opportunities for their integration and expansion, and considering a new landscape focused specifically on Responsible AI within the LF.

- Developing Community Guidance: Providing clear direction and resources for the LF/CNCF community to actively engage in Responsible AI initiatives.
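To make the classification and gap/overlap steps more concrete, here is a minimal Python sketch, assuming each project is simply tagged with the subset of the nine dimensions it addresses. The `Dimension` enum, the `coverage`, `gaps`, and `overlaps` helpers, and the project names are hypothetical illustrations; they are not an LF tool, and the tags are not an actual classification of any LF/CNCF project.

```python
from enum import Enum


class Dimension(Enum):
    """The nine Responsible AI dimensions listed in the framework."""
    HUMAN_CENTERED_ALIGNED = "Human-centered & Aligned"
    ACCESSIBLE_INCLUSIVE = "Accessible & Inclusive"
    ROBUST_RELIABLE_SAFE = "Robust, Reliable & Safe"
    TRANSPARENT_EXPLAINABLE = "Transparent & Explainable"
    OBSERVABLE_ACCOUNTABLE = "Observable & Accountable"
    PRIVATE_SECURE = "Private & Secure"
    COMPLIANT_CONTROLLABLE = "Compliant & Controllable"
    ETHICAL_FAIR = "Ethical & Fair"
    ENVIRONMENTALLY_SUSTAINABLE = "Environmentally Sustainable"


def coverage(classification: dict[str, set[Dimension]]) -> dict[Dimension, list[str]]:
    """Invert a project -> dimensions map into dimension -> sorted project names."""
    result: dict[Dimension, list[str]] = {d: [] for d in Dimension}
    for project, dims in classification.items():
        for d in dims:
            result[d].append(project)
    for projects in result.values():
        projects.sort()
    return result


def gaps(classification: dict[str, set[Dimension]]) -> list[Dimension]:
    """Dimensions that no classified project addresses (candidate focus areas)."""
    return [d for d, projects in coverage(classification).items() if not projects]


def overlaps(classification: dict[str, set[Dimension]]) -> dict[Dimension, list[str]]:
    """Dimensions addressed by more than one project (candidates for coordination)."""
    return {d: p for d, p in coverage(classification).items() if len(p) > 1}


if __name__ == "__main__":
    # Hypothetical projects and tags, for illustration only.
    example = {
        "project-a": {Dimension.PRIVATE_SECURE, Dimension.ROBUST_RELIABLE_SAFE},
        "project-b": {Dimension.TRANSPARENT_EXPLAINABLE, Dimension.ETHICAL_FAIR},
        "project-c": {Dimension.PRIVATE_SECURE},
    }
    for d in gaps(example):
        print("gap:", d.value)
    for d, projects in overlaps(example).items():
        print("overlap:", d.value, projects)
```

A dimension with no projects flags a gap, and a dimension with several projects flags a possible overlap worth coordinating. A real assessment would likely need richer criteria than a binary tag per dimension.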

Pillar 3: Deep Dive into Key Dimensions

This pillar delves into the core dimensions of Responsible AI, providing in-depth analysis and practical guidance:

Security: Addressing vulnerabilities in AI systems and protecting against adversarial attacks. This includes expanding the scope of existing initiatives like “Security for Cloud Native AI” (CNCF) to encompass non-CNCF projects and fostering collaboration with the CNCF on secure AI tools (e.g., homomorphic encryption, adversarial training).

Safety: Ensuring AI operates within safe boundaries, minimizing unintended harm, and building mechanisms for human oversight. This includes exploring techniques such as value alignment, explainable AI, and safety-critical system design.

Sustainability: Developing environmentally responsible AI systems that minimize energy consumption and resource usage, and researching efficient algorithms, green hardware, and sustainable data center practices.

We will cover the remaining dimensions in the upcoming Responsible AI Framework paper, which also explores interoperability across projects within those dimensions. Each dimension will be explored through dedicated white papers and write-ups, providing concrete recommendations and best practices for the community.

Pillar 4: Grounding Principles in Practice – Use Cases and Guidance

Making Responsible AI principles tangible requires grounding them in real-world applications:

- AI Systems (e.g., RAG): Focusing on responsible data sourcing, bias detection, and transparency in systems such as Retrieval-Augmented Generation, and analyzing ethical considerations in the design, development, and deployment of complex AI systems.

- Industry-Specific Applications: Examining the implementation of Responsible AI within specific sectors:

  - Healthcare: Ensuring fairness, accuracy, and transparency in AI-driven diagnostics and treatment recommendations, and protecting patient data privacy.

  - Finance: Preventing bias in lending, risk assessment, and fraud detection.

  - Other Sectors: Expanding to other relevant sectors as the initiative progresses.

To facilitate practical implementation, we will provide:

- Best Practices: Developing guidelines for responsible AI development and deployment.

- Open-Source Tools: Creating and sharing tools for bias detection, explainability, and privacy preservation.

- Qualifying New Projects: Introducing a process for evaluating new LF projects based on their adherence to Responsible AI principles.

- Education and Training: Creating resources, workshops, and certifications to empower developers and practitioners.

- Community Collaboration: Fostering open dialogue and cooperation among stakeholders to advance Responsible AI.

Join Us!

LF AI & Data invites everyone to join this initiative. By working together, we can help build a future where AI benefits all of humanity while minimizing the risks associated with adopting a general-purpose technology.

Visit our website or join us on Slack to learn more, contribute, and help build a more responsible AI ecosystem.