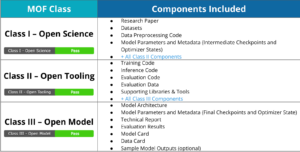

Last week, during the Linux Foundation’s AI_dev Europe conference in Paris, France, LF AI & Data released a beta version of the Model Openness Tool (MOT). The MOT evaluates the criteria defined in the Model Openness Framework (MOF) and assigns a score based on which model elements are available and how they are licensed. This score determines the model’s classification into categories such as Open Science, Open Tooling, or Open Model. The MOT provides a practical, user-friendly way to apply the MOF to your model, producing a clear, self-service score.

The MOF is a comprehensive system for evaluating and classifying the completeness and openness of machine learning models. It addresses the challenges of open AI by assessing which components of the model development lifecycle are publicly released and under what licenses, ensuring an objective evaluation.

Challenges of Open AI

As AI continues to expand, ensuring the openness of AI models presents significant challenges:

- Lack of a Common Understanding of Openness in AI: There is no universal agreement on what defines openness in AI, leading to varied interpretations and implementations.

- Open Source Software Licenses on Non-Software Assets: Applying software licenses to datasets, models, and other non-software components can create confusion and legal complexities.

- Diverse Restrictions Including Acceptable Use Policies: These policies can restrict the use of AI models and their components, conflicting with the principles of openness.

- Lack of Understanding of License Implications in AI Contexts: Many developers and researchers are not fully aware of how different licenses impact the use and distribution of AI models.

- Incomplete Release of Model Components: Often, only some parts of an AI model are made available, which hampers transparency and reproducibility.

What is the Model Openness Framework?

The MOF provides a structured approach for evaluating the completeness and openness of machine learning models based on open science principles. It helps researchers and developers enhance model transparency and reproducibility while allowing permissive usage. The MOF aims to remove ambiguity by offering clear guidance on the availability of model components, licensing, and suitability for commercial use without restrictions.

The Model Openness Tool (MOT)

LF AI & Data created the Model Openness Tool (MOT) to implement the MOF. The tool evaluates each criterion from the MOF and generates a score based on how well each one is met, giving model producers a self-service way to apply the framework to their own models.

How It Works

The MOT presents users with 16 questions about their model, and users provide a detailed response to each one. Based on these inputs, the tool calculates a score and assigns the model to one of three openness classes: 1, 2, or 3.
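To make the idea concrete, here is a minimal Python sketch of how answers about model components could be turned into a class of 1, 2, or 3. It is not the MOT's actual scoring logic. The component names, the `TIER_REQUIREMENTS` groupings, the `Answer` type, and the `classify` function are all hypothetical, and the sketch assumes that the classes are cumulative, with class 3 as the entry level and class 1 as the most complete.

```python
from dataclasses import dataclass

# Hypothetical component groups, for illustration only. The real MOF
# defines its own component list and its own requirements per class.
TIER_REQUIREMENTS = {
    3: {"model_architecture", "model_parameters", "model_card"},
    2: {"training_code", "evaluation_code"},
    1: {"research_paper", "datasets"},
}


@dataclass(frozen=True)
class Answer:
    """One questionnaire response about a single model component."""
    component: str
    released: bool       # is the component publicly available?
    open_license: bool   # is it released under an open license?


def classify(answers):
    """Return 1, 2, or 3 for the most complete class met, or None.

    Assumes classes are cumulative: class 2 also requires everything
    in class 3, and class 1 requires everything in classes 2 and 3.
    """
    satisfied = {a.component for a in answers if a.released and a.open_license}
    achieved = None
    for tier in (3, 2, 1):
        if not TIER_REQUIREMENTS[tier] <= satisfied:
            break  # a missing component stops progression to higher classes
        achieved = tier
    return achieved


answers = [
    Answer("model_architecture", True, True),
    Answer("model_parameters", True, True),
    Answer("model_card", True, True),
    Answer("training_code", True, True),
    Answer("evaluation_code", True, False),  # released, but not openly licensed
]
print(classify(answers))  # prints 3
```

In this sketch a component counts only if it is both released and openly licensed, which mirrors the two things the tool asks about: availability and licensing.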

Why We Developed MOT

Our goal in developing the MOT was to offer a straightforward tool for evaluating machine learning models against the MOF. This tool helps users understand what components are included with each model and the licenses associated with those components, providing clarity on what can and cannot be done with the model and its parts.

Discover the Model Openness Framework and Tool

Explore the Model Openness Framework and the Model Openness Tool today to see how your models measure up. We invite model creators to use the MOT for their models and help us drive more openness, transparency, and understanding of licensing related to AI models.
