Guest Author: Alka Roy, Founder, The Responsible Innovation Project

On behalf of the LF AI & Data’s Trusted AI Committee Principles Working Group, I am pleased to announce LF AI & Data’s Principles for Trusted AI. LF AI & Data is an umbrella foundation of the Linux Foundation that supports open source innovation in artificial intelligence, machine learning, deep learning, and data.

With these principles, the LF AI & Data Foundation is not only joining other open source and AI communities in adopting a set of ethical, responsible, and trust-based principles, it is also inviting the larger Linux Foundation community (19K+ companies and 235K+ developers) to lead with trust and responsibility. According to its website, the “Linux Foundation enables global innovation by growing open technology ecosystems that transform industries: 100% of supercomputers use Linux, ~95% public cloud providers use Kubernetes, 70% global mobile subscribers run on networks built using ONAP, 50% of the Fortune Top enterprise blockchain deployments use Hyperledger.”

With such immense impact and scale comes an equally great responsibility to approach innovation with trust. LF AI & Data’s AI principles are guided by a vision to expand access and invite innovation at all levels of engagement. The language of the principles has been kept simple and easy to understand, yet flexible, to encourage wider adoption. Not an easy task.

The Process

These principles were derived after more than a year of deliberation, which included parsing the AI principles, guidelines, and contributions of various industry groups, non-profits, and partner companies, while always keeping the community and social impact front and center. In addition to input from member companies and non-profit groups, guidelines from OECD, EU, SoA, ACM, IEEE, and DoD were also referenced. The key criteria balanced competing interests across the industry and companies with the need for open and innovative technology built with trust and accountability.

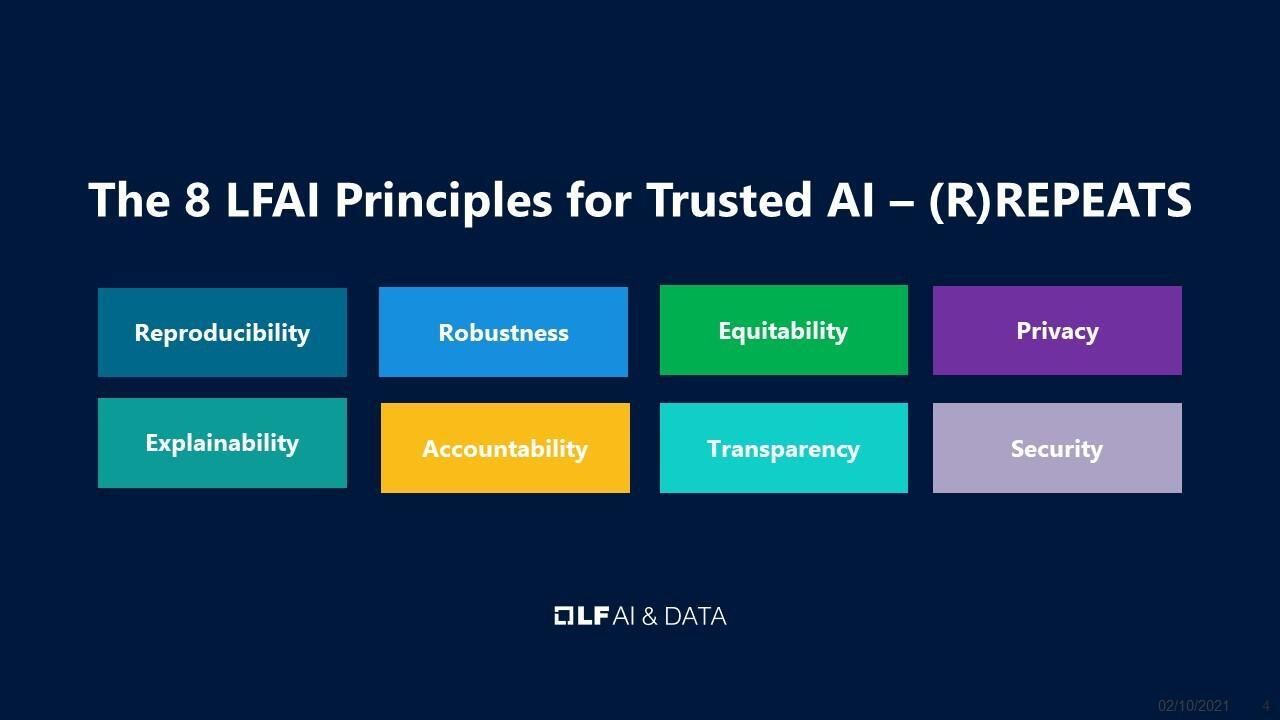

LF AI & Data Foundation AI Principles: (R)REPEATS

The (R)REPEATS acronym captures the principles of Reproducibility, Robustness, Equitability, Privacy, Explainability, Accountability, Transparency, and Security. The image above illustrates that a cohesive approach to implementation is needed. The order in which the principles are listed is not meant to denote hierarchy. Nor is this a list from which to pick and choose what is convenient. Rather, as so many discussions and efforts to implement AI principles, both in the industry and by committee members in their own ecosystems, have illustrated, all these principles are interconnected, interdependent, and important.

Artificial Intelligence (AI) in the following definitions refers to and implies any flavor and use of Artificial Intelligence or a derivative of Artificial Intelligence, including but not limited to software or hardware, and simple or complex systems that include machine learning, deep learning, or data integrated with other adjacent technologies like computer vision, whether created by people or another AI.

- Reproducibility is the ability of an independent team to replicate, in an equivalent AI environment, domain, or area, the same experiences or results using the same AI methods, data, software, code, algorithms, models, and documentation, and to reach the same conclusions as the original research or activity. Adhering to this principle will ensure the reliability of the results or experiences produced by any AI.

- Robustness refers to the stability, resilience, and performance of the systems and machines dealing with changing ecosystems. AI must function robustly throughout its life cycle and potential risks should be continually assessed and managed.

- Equitability requires AI and the people behind AI to take deliberate steps, throughout the AI life cycle, to avoid intended or unintended bias and unfairness that would inadvertently cause harm.

- Privacy requires AI systems to guarantee privacy and data protection throughout a system’s entire lifecycle. The lifecycle activities include the information initially collected from users, as well as information generated about users throughout their interaction with the system (e.g., outputs that are AI-generated for specific users, or how users responded to recommendations). Any AI must ensure that data collected or inferred about individuals will not be used to unlawfully or unfairly discriminate against them. Privacy and transparency are especially needed when dealing with digital records that allow inferences such as identity, preferences, and future behavior.

- Explainability is the ability to describe how AI works, i.e., makes decisions. Explanations should be produced regarding both the procedures followed by the AI (i.e., its inputs, methods, models, and outputs) and the specific decisions that are made. These explanations should be accessible to people with varying degrees of expertise and capabilities including the public. For the explainability principle to take effect, the AI engineering discipline should be sufficiently advanced such that technical experts possess an appropriate understanding of the technology, development processes, and operational methods of its AI systems, including the ability to explain the sources and triggers for decisions through transparent, traceable processes and auditable methodologies, data sources, and design procedure and documentation.

- Accountability requires AI and people behind the AI to explain, justify, and take responsibility for any decision and action made by the AI. Mechanisms, such as governance and tools, are necessary to achieve accountability.

- Transparency entails disclosure about AI systems to ensure that people understand AI-based outcomes, especially in high-risk AI domains. When relevant and not immediately obvious, users should be clearly informed when and how they are interacting with an AI and not a human being. Transparency requires clear information about the AI’s capabilities and limitations, in particular the purpose for which the systems are intended. Information about training and testing data sets (where feasible), the conditions under which the AI can be expected to function as intended, and the expected level of accuracy in achieving the specified purpose should also be supplied.

- Security and safety of AI should be tested and assured across the entire life cycle within an explicit and well-defined domain of use. In addition, any AI should be designed to also safeguard the people who are impacted.

In addition to the definitions of the principles shared here, further descriptions and background can be accessed on the LF AI & Data wiki.

The Team

The list of principles was reviewed and proposed by a committee and approved by the Technical Advisory Council of LF AI & Data. The Trusted AI Principles Working Group was chaired by Souad Ouali (Orange) and included Jeff Cao (Tencent), Francois Jezequel (Orange), Sarah Luger (Orange Silicon Valley), Susan Malaika (IBM), Alka Roy (The Responsible Innovation Project/ Former AT&T), Alejandro Saucedo (The Institute for Ethical AI / Seldon), and Marta Ziosi (AI for People).

What’s Next?

This blog announcement serves as an open call for AI Projects to examine and adopt the Principles – at various stages in their life-cycle. We invite the LF AI & Data community to engage with the principles and examine how to apply them to their projects and share the results and challenges within the wider community of the Linux Foundation as well as LF AI & Data.

Open source communities have a tradition of taking standards, code, or ideas, putting them into practice, sharing results, and evolving them. We also invite volunteers to help assess the relationship of the Principles with existing and emerging trusted AI toolkits and software to help identify any gaps and solutions. The LF AI & Data Trusted AI Committee is holding a webinar hosted by the Principles Working Group members to present the principles, solicit feedback, and continue to explore other options to engage the larger community.

Call to Action:

- Join the Principles Committee and help develop strategies for applying the principles.

- Apply principles to your projects and share feedback with the Trusted AI community.

- Register for the upcoming Webinar or submit your questions to the committee.

It’s only through a collective commitment to, and habit of, putting these principles into practice that we can get closer to building AI that is trustworthy and serves a larger community.
